The official MCP servers look solid on paper. Pre-built integrations for GitHub, Slack, Google Drive—everything you need to connect AI agents to your SaaS tools. Plug and play, right?

In practice, they collapse under enterprise needs.

The problem isn't that official MCP servers are poorly built. It's that they're solving the wrong problem. They're optimized for breadth—supporting as many use cases as possible—when enterprises need depth: the exact endpoints, parameters, and authentication flows that match how your organization actually uses these tools.

The Reality of Enterprise API Integration

Major SaaS platforms expose hundreds of API endpoints. GitHub alone has 500+ endpoints per API version. Each organization uses a different subset of these capabilities, configured in its own way:

- Which endpoints you actually need (you're not using all 500)

- What parameters matter for your workflows

- How to map request bodies and responses to your data model

- How to test against your specific environment and edge cases

- What to monitor based on your reliability and compliance requirements

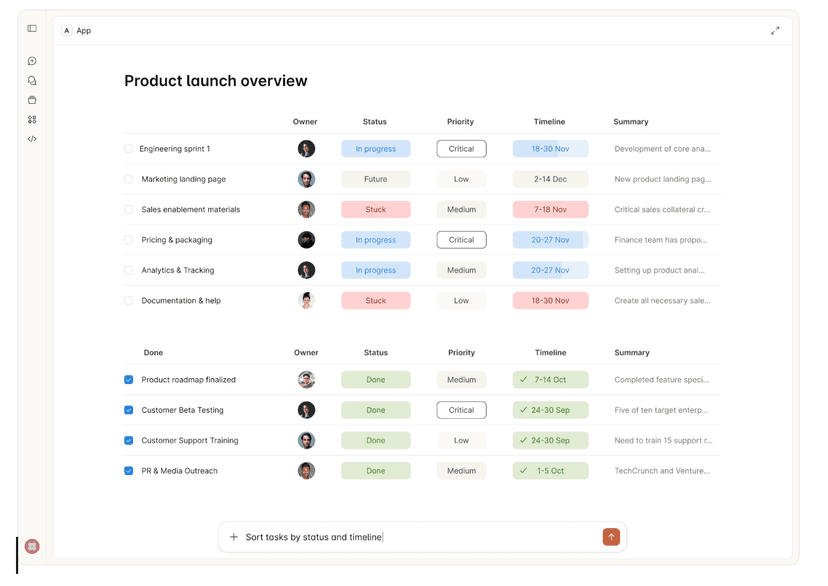

Now imagine asking an AI agent to handle all of this variability. The agent needs precise tooling—tools that know exactly which GitHub endpoints your org uses, what the expected parameters look like, and how to authenticate properly.

Official MCP servers can't provide this. They're too generic by design.

Case Study: GitHub's Official MCP Server

Take GitHub's official MCP server as a concrete example. It's one of the most polished official servers available, and it still has critical gaps:

Missing Critical Capabilities

The server exposes a subset of GitHub's API, but misses capabilities that many organizations rely on:

- No support for GitHub Apps or fine-grained personal access tokens

- Limited repository automation (no workflow dispatch triggers)

- Missing organization-level operations (member management, security policies)

- No support for GitHub Projects, Discussions, or Packages

If your AI workflow needs any of these—and most enterprise workflows do—you're stuck.

No Built-in OAuth Support

Here's where it gets painful: in its standard local setup, the official server is configured with a personal access token (PAT) rather than walking each user through an OAuth flow.

Try explaining to your CISO why your AI agents need personal tokens with broad repository access, instead of properly scoped OAuth apps with audit trails. That's a non-starter for most security teams.

Enterprise organizations need:

- OAuth flows with user consent

- Token scoping per user and application

- Audit logs showing which user authorized which action

- Automatic token refresh and revocation

None of this comes out of the box with official servers.
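To make the scoping and audit requirements concrete, here is a minimal sketch of a per-user check that also produces an audit line. The `Grant` shape, the scope strings such as `issues:write`, and the record fields are all assumptions for illustration, not part of any official server or of GitHub's scope vocabulary.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """What one user has consented to for one application (hypothetical shape)."""
    user: str
    app: str
    scopes: frozenset


def check_and_audit(grant: Grant, tool: str, required_scope: str,
                    now: Optional[datetime] = None) -> tuple:
    """Decide whether the call is allowed and return (allowed, audit_line)."""
    now = now or datetime.now(timezone.utc)
    allowed = required_scope in grant.scopes
    record = {
        "ts": now.isoformat(),
        "user": grant.user,
        "app": grant.app,
        "tool": tool,
        "required_scope": required_scope,
        "allowed": allowed,
    }
    # One JSON object per line is easy to ship to most log pipelines.
    return allowed, json.dumps(record, sort_keys=True)


# Example scope name is made up; substitute whatever your provider issues.
grant = Grant(user="alice", app="triage-agent", scopes=frozenset({"issues:write"}))
allowed, line = check_and_audit(grant, "create_issue", "issues:write")
print(allowed)  # True
```

The point of the design is that denied calls are logged too, so a security team can see both what an agent did and what it tried to do on a user's behalf.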

Already Hitting Tool Limits

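There are practical limits on how many tools a single MCP server can expose before performance and usability degrade: every tool definition takes up room in the model's context, and a long tool list makes it harder for the model to pick the right one. GitHub's official server is already approaching these limits, and it only covers a fraction of the API surface.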

When you need to add custom endpoints, workflows, or organization-specific logic, there's no room left to extend.
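One rough way to reason about that headroom is to measure how much text your tool definitions occupy and trim the list to a budget. The sketch below assumes tool definitions are dictionaries with `name`, `description`, and `inputSchema` keys, and uses serialized character count as a crude stand-in for context usage. The budget value and the tool names are hypothetical.

```python
import json


def definition_size(tool: dict) -> int:
    """Characters in the compact JSON form of one tool definition."""
    return len(json.dumps(tool, separators=(",", ":")))


def fit_to_budget(tools: list, priority: list, max_chars: int) -> list:
    """Walk tools in priority order and keep each one that still fits."""
    by_name = {t["name"]: t for t in tools}
    kept, used = [], 0
    for name in priority:
        tool = by_name.get(name)
        if tool is None:
            continue  # priority list may mention tools this server lacks
        size = definition_size(tool)
        if used + size > max_chars:
            continue  # too big for what is left; a smaller tool may still fit
        kept.append(tool)
        used += size
    return kept


# Hypothetical tool definitions for illustration only.
tools = [
    {"name": "create_issue", "description": "Open an issue.",
     "inputSchema": {"type": "object"}},
    {"name": "list_pulls", "description": "List pull requests.",
     "inputSchema": {"type": "object"}},
]
kept = fit_to_budget(tools, ["create_issue", "list_pulls"], max_chars=10_000)
print([t["name"] for t in kept])
```

Character count is only a proxy. If you need accuracy, measure with the tokenizer of the model you actually deploy.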

Why This Pattern Repeats Across SaaS Platforms

The GitHub example isn't unique. The same issues appear with every major SaaS platform:

Salesforce: Needs custom objects, validation rules, and approval processes specific to your CRM setup

Jira: Requires custom fields, workflows, and project configurations that vary by team

Slack: Depends on workspace-specific channels, user groups, and custom app integrations

Official MCP servers can't anticipate these variations. They provide the lowest common denominator (the API operations that most organizations might use), not the specific combination your organization actually uses.

The Path Forward: Build Your Own MCP Servers

If you're serious about AI adoption in your organization, you'll need to build custom MCP servers. This isn't a nice-to-have. It's a requirement for making AI agents actually useful.

What This Means in Practice

1. Map Your Integration Requirements

Start by documenting which SaaS endpoints your organization actually needs:

- Which operations do your workflows depend on?

- What data transformations are required?

- What error handling is specific to your setup?

Don't try to mirror the entire API. Focus on the 20% of endpoints that drive 80% of your value.
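One way to capture that map is a small manifest that lists only the operations you have decided to expose, with everything else rejected by default. This is a sketch under assumptions: the `EndpointSpec` fields, the tool names, and the owning teams are hypothetical, while the three path templates follow GitHub's REST API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointSpec:
    """One upstream operation the organization exposes as a tool."""
    tool_name: str
    method: str
    path: str                    # path template on the upstream REST API
    required_params: tuple = ()
    owner_team: str = "unassigned"


# Hypothetical manifest: the handful of operations this org actually uses.
MANIFEST = (
    EndpointSpec("create_issue", "POST", "/repos/{owner}/{repo}/issues",
                 ("owner", "repo", "title"), "platform"),
    EndpointSpec("list_pulls", "GET", "/repos/{owner}/{repo}/pulls",
                 ("owner", "repo"), "platform"),
    EndpointSpec("dispatch_workflow", "POST",
                 "/repos/{owner}/{repo}/actions/workflows/{workflow_id}/dispatches",
                 ("owner", "repo", "workflow_id", "ref"), "release-eng"),
)


def resolve(tool_name: str, params: dict) -> tuple:
    """Return (method, concrete_path) for an allowlisted tool, or raise."""
    spec = next((s for s in MANIFEST if s.tool_name == tool_name), None)
    if spec is None:
        raise KeyError(f"{tool_name!r} is not in the manifest")
    missing = [p for p in spec.required_params if p not in params]
    if missing:
        raise ValueError(f"missing parameters: {missing}")
    # str.format ignores params that do not appear in the path (e.g. "title").
    return spec.method, spec.path.format(**params)


print(resolve("create_issue", {"owner": "acme", "repo": "web", "title": "Bug"}))
# ('POST', '/repos/acme/web/issues')
```

Keeping the manifest as data rather than code means a reviewer can see the full exposed surface in one place, and adding an endpoint becomes a reviewed change instead of a side effect.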

2. Implement OAuth Properly

Build OAuth flows into your custom MCP servers from the start:

- Use the OAuth 2.0 authorization code flow

- Store tokens securely (encrypted at rest, never in logs)

- Implement token refresh logic

- Add proper error handling for expired or revoked tokens

This is more work upfront, but it's non-negotiable for enterprise security.
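The first and third bullets can be sketched with the standard library alone. The example below builds the authorization URL for the first leg of the authorization code flow, using GitHub's authorize endpoint, and wraps a token so it refreshes shortly before expiry. It assumes your provider issues expiring access tokens with refresh tokens. `TokenManager`, `refresh_fn`, the client ID, and the redirect URI are hypothetical names, and the network call that exchanges the refresh token is deliberately left to the caller.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable
from urllib.parse import urlencode

AUTHORIZE_URL = "https://github.com/login/oauth/authorize"


def build_authorize_url(client_id: str, redirect_uri: str, scopes: list) -> tuple:
    """Return (url, state). Persist state and compare it on the callback."""
    state = secrets.token_urlsafe(32)  # unguessable value to block CSRF
    query = urlencode({
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),
        "state": state,
    })
    return f"{AUTHORIZE_URL}?{query}", state


@dataclass
class Token:
    access_token: str
    refresh_token: str
    expires_at: float  # epoch seconds


class TokenManager:
    """Hands out a valid access token, refreshing slightly before expiry."""

    def __init__(self, token: Token, refresh_fn: Callable[[str], Token],
                 skew: float = 60.0, clock: Callable[[], float] = time.time):
        self._token = token
        self._refresh_fn = refresh_fn  # caller supplies the HTTP exchange
        self._skew = skew
        self._clock = clock

    def access_token(self) -> str:
        if self._clock() >= self._token.expires_at - self._skew:
            # A failure here (for example a revoked grant) should propagate
            # so the caller can send the user back through consent.
            self._token = self._refresh_fn(self._token.refresh_token)
        return self._token.access_token


# Hypothetical client ID and callback URL.
url, state = build_authorize_url(
    "my-client-id", "https://mcp.example.internal/callback", ["repo", "read:org"]
)
```

Injecting `refresh_fn` and `clock` keeps the refresh logic testable without a network or real waiting. Encrypted storage for the `Token` itself is out of scope for this sketch.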

3. Add Organization-Specific Logic

Layer in the customization that makes the integration actually useful:

- Map API responses to your internal data model

- Add validation rules specific to your governance policies

- Implement retries and fallbacks based on your reliability requirements

- Build monitoring that alerts on your critical paths
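Two of these layers, response mapping and retries, fit in a short sketch. The internal ticket shape, the `GH-` prefix, and the `TransientError` type are assumptions for illustration. The input fields (`number`, `title`, `state`, `labels`) follow the issue objects returned by GitHub's REST API.

```python
import time
from typing import Callable


class TransientError(Exception):
    """Raised by the API layer for failures worth retrying (hypothetical)."""


def with_retries(call: Callable, attempts: int = 3, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep):
    """Run call(), retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...


def to_internal_ticket(issue: dict) -> dict:
    """Map a GitHub issue payload onto a hypothetical internal ticket shape."""
    return {
        "ticket_id": f"GH-{issue['number']}",
        "summary": issue["title"],
        "is_open": issue["state"] == "open",
        "labels": sorted(label["name"] for label in issue.get("labels", [])),
    }


issue = {"number": 42, "title": "Login fails", "state": "open",
         "labels": [{"name": "bug"}, {"name": "auth"}]}
print(to_internal_ticket(issue))
# {'ticket_id': 'GH-42', 'summary': 'Login fails', 'is_open': True, 'labels': ['auth', 'bug']}
```

Only errors you have classified as transient are retried. Retrying everything would hide permission and validation failures that the agent should see immediately.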

4. Treat It as Infrastructure

Custom MCP servers aren't one-off scripts. They're infrastructure that needs:

- Version control and code review

- Automated testing (unit tests for business logic, integration tests for API calls)

- Deployment pipelines with staging environments

- Monitoring, logging, and incident response playbooks
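For the testing bullet, a unit test for tool logic can run without touching the real API if the client is injected. The sketch below uses the standard `unittest` module. `summarize_open_pulls`, `FakeClient`, and its `list_pulls` method are hypothetical names standing in for your own tool handler and API wrapper.

```python
import unittest


def summarize_open_pulls(client, owner: str, repo: str) -> list:
    """Tool logic under test: one line per open pull request."""
    pulls = client.list_pulls(owner, repo, state="open")
    return [f"#{p['number']} {p['title']}" for p in pulls]


class FakeClient:
    """In-memory stand-in for the real API wrapper (hypothetical interface)."""

    def __init__(self, pulls: list):
        self._pulls = pulls
        self.calls = []

    def list_pulls(self, owner: str, repo: str, state: str) -> list:
        self.calls.append((owner, repo, state))
        return [p for p in self._pulls if p["state"] == state]


class SummarizeOpenPullsTest(unittest.TestCase):
    def test_only_open_pulls_are_listed(self):
        client = FakeClient([
            {"number": 7, "title": "Fix login", "state": "open"},
            {"number": 8, "title": "Old refactor", "state": "closed"},
        ])
        self.assertEqual(summarize_open_pulls(client, "acme", "web"),
                         ["#7 Fix login"])
        self.assertEqual(client.calls, [("acme", "web", "open")])

    def test_empty_repo_gives_empty_summary(self):
        self.assertEqual(summarize_open_pulls(FakeClient([]), "acme", "web"), [])
```

Run it with `python -m unittest` from the directory containing the file. Integration tests against a staging organization would sit alongside tests like these rather than replace them.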

The Trade-Off You're Making

Building custom MCP servers is expensive. You need engineering resources, ongoing maintenance, and security review processes. There's no way around it.

But here's the alternative: continue relying on official servers that almost work, watching your AI adoption stall because the integrations aren't quite right. Teams lose confidence in the AI tools, security teams block deployments, and you never get past the proof-of-concept phase.

The cost of building custom MCP servers is high. The cost of not building them—in terms of failed AI adoption—is higher.

Where Official Servers Still Make Sense

Official MCP servers aren't useless. They work well for:

- Prototyping and demos: Quick way to show what's possible

- Low-stakes workflows: Where security and customization matter less

- Learning the protocol: Good reference implementations to study

But if you're deploying AI agents that touch production systems, handle customer data, or integrate with critical business processes, plan to build your own.

The Question for Your Organization

Is your organization still betting on official MCP servers—or are you already rolling your own?

If you're in the "build" phase, you're making the right call. It's harder, but it's the only path to AI adoption that actually scales across your organization.

If you're still relying on official servers, ask yourself: what happens when you hit the limits described above? Do you have a plan to transition to custom implementations, or are you hoping someone else will solve these problems for you?

The enterprises that successfully adopt AI won't be the ones with the best official integrations. They'll be the ones who understood early that real integration requires real engineering work.