Last Sunday at the Cursor Tel Aviv Meetup, I shared what's next for the Model Context Protocol in Cursor. The room was packed with developers who, like me, have been watching MCP evolve from an interesting spec into something that's actually changing how we build with AI.

Four new features caught my attention: Prompts, Resources, Elicitation, and Dynamic Tools. Each one adds precision to context, and that precision directly impacts output quality. If you're building MCP servers or using Cursor daily, these aren't just nice-to-haves—they're the new baseline for MCP UX.

Why MCP Context Precision Matters

Before diving into the features, here's the core problem they solve: AI coding assistants are only as good as the context they receive. Generic tool descriptions and scattered information lead to mediocre results. The new MCP features in Cursor address this by giving developers explicit control over how context gets delivered to the model.

MCP acts like USB-C for AI—one standardized protocol that lets models plug into any system without custom integrations each time. With over 1,000 available MCP servers and 80+ compatible clients, it's rapidly becoming the de facto standard. OpenAI and Google have already adopted it. These four features represent the next evolution of that standard.

Feature 1: Prompts - Reusable Workflow Templates

Prompts are pre-built instruction templates that live in your MCP server. Think of them as slash commands, but smarter—they encapsulate complex workflows that would otherwise require multiple back-and-forth exchanges.

How Prompts Work

The user decides when to invoke a prompt. When they do, the client requests it from the MCP server, which returns a complete, structured instruction for the model (one or more messages), along with any dynamic context needed for that specific invocation.

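```typescript
// MCP Server: prompt handlers (assumes `server` is an MCP SDK Server instance)
import {
  GetPromptRequestSchema,
  ListPromptsRequestSchema,
} from '@modelcontextprotocol/sdk/types.js'

server.setRequestHandler(
  ListPromptsRequestSchema,
  async (request, { authInfo }) => {
    // Return list of available prompts: { name, description, arguments }
    return { prompts: [] }
  },
)

server.setRequestHandler(
  GetPromptRequestSchema,
  async (request, { authInfo }) => {
    // Return prompt content for the workflow named in request.params.name
    return { messages: [] }
  },
)
```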
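To make those two handlers concrete, here is a minimal, SDK-free sketch of what they might return. The result shapes (`prompts` entries with `name`, `description`, and `arguments`; `messages` entries with a `role` and text `content`) follow the MCP spec. The `generate_prd` prompt, its `ticket_id` argument, and the template wording are hypothetical stand-ins for a PRD workflow like the one in the use cases.

```typescript
// Minimal prompt registry sketch. Result shapes mirror MCP's prompts/list and
// prompts/get; the prompt name, argument, and template text are hypothetical.
interface PromptArgument {
  name: string
  description?: string
  required?: boolean
}

interface PromptDefinition {
  name: string
  description: string
  arguments: PromptArgument[]
  // Builds the instruction text from the caller's arguments
  render: (args: Record<string, string>) => string
}

interface PromptMessage {
  role: 'user' | 'assistant'
  content: { type: 'text'; text: string }
}

const prompts: PromptDefinition[] = [
  {
    name: 'generate_prd',
    description: 'Generate a PRD from a Linear ticket',
    arguments: [{ name: 'ticket_id', description: 'Linear ticket ID', required: true }],
    render: (args) =>
      `Fetch Linear ticket ${args.ticket_id}, review any attached Figma designs, ` +
      'and write a PRD using our standard template.',
  },
]

// prompts/list result: metadata only, never the template itself
function listPrompts() {
  return {
    prompts: prompts.map((p) => ({
      name: p.name,
      description: p.description,
      arguments: p.arguments,
    })),
  }
}

// prompts/get result: check required arguments, then render the messages
function getPrompt(name: string, args: Record<string, string> = {}) {
  const prompt = prompts.find((p) => p.name === name)
  if (!prompt) throw new Error(`Unknown prompt: ${name}`)
  for (const arg of prompt.arguments) {
    if (arg.required && !(arg.name in args)) {
      throw new Error(`Missing required argument: ${arg.name}`)
    }
  }
  const messages: PromptMessage[] = [
    { role: 'user', content: { type: 'text', text: prompt.render(args) } },
  ]
  return { description: prompt.description, messages }
}
```

Keeping the template server-side is what makes the workflow shareable: edit it once and every connected client picks up the new version on its next `prompts/get`.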

Practical Use Cases

In my own workflow, I've built prompts for:

- Generate PRD from Linear ticket: Pulls the ticket data, analyzes attached Figma designs, combines everything into a structured product requirements document using a company-specific template

- Create component with design system rules: Automatically includes design system guidelines, accessibility requirements, and generates implementation that follows our conventions

- Send meeting summary to attendees: Extracts action items, formats them properly, and prepares the email draft with appropriate context

The key difference from just writing good prompts manually? Reusability and distribution. Once you've nailed a workflow, everyone on your team gets access to it through their MCP gateway. No more copying prompt templates into Notion docs.

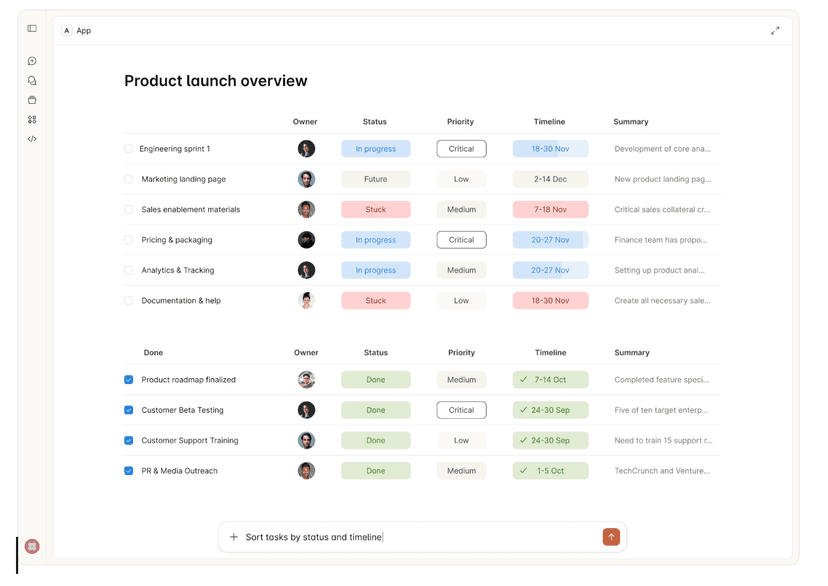

In Cursor, prompts appear as autocomplete options when you type / followed by your trigger. For developers building MCP servers: invest time in crafting these prompts. They dramatically improve adoption because users get immediate value without a learning curve.

Feature 2: Resources - Dynamic Context Injection

Resources are structured data that the AI application can fetch and inject into context automatically, based on what the model needs.

The Resource Flow

Unlike prompts (user-initiated), resources are application-driven. The client application decides when additional context is needed, typically based on what the model is working on, then requests specific resources from your MCP server.

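```typescript
// Assumes `server` is an MCP SDK Server instance
import {
  ListResourcesRequestSchema,
  ReadResourceRequestSchema,
} from '@modelcontextprotocol/sdk/types.js'

// Resource discovery
server.setRequestHandler(
  ListResourcesRequestSchema,
  async (request, { authInfo }) => {
    // List available resources: { uri, name, description, mimeType }
    return { resources: [] }
  },
)

// Resource access
server.setRequestHandler(
  ReadResourceRequestSchema,
  async (request, { authInfo }) => {
    // Return content for the resource at request.params.uri
    return { contents: [] }
  },
)
```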
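A minimal, SDK-free sketch of the data those two handlers could serve. The result shapes (`resources` entries with `uri`, `name`, `mimeType`; `contents` entries with `uri`, `mimeType`, `text`) follow the MCP spec. The `docs://` URIs and placeholder text are hypothetical. Each document is loaded lazily, so only the resource the client actually reads ever reaches the context.

```typescript
// Minimal resource registry sketch; URIs and content are hypothetical.
interface ResourceEntry {
  uri: string
  name: string
  description: string
  mimeType: string
  load: () => string // only called on resources/read
}

const resources: ResourceEntry[] = [
  {
    uri: 'docs://troubleshooting/http-500',
    name: 'HTTP 500 troubleshooting guide',
    description: 'Common causes and fixes for 500 errors in the MCP client',
    mimeType: 'text/markdown',
    load: () => '# HTTP 500 troubleshooting\n(guide content here)\n',
  },
  {
    uri: 'docs://api/users',
    name: 'Users API endpoint spec',
    description: 'Request and response schema for the /users endpoint',
    mimeType: 'text/markdown',
    load: () => '# GET /users\n(endpoint spec here)\n',
  },
]

// resources/list result: cheap metadata the client can show or search
function listResources() {
  return {
    resources: resources.map((r) => ({
      uri: r.uri,
      name: r.name,
      description: r.description,
      mimeType: r.mimeType,
    })),
  }
}

// resources/read result: full content for exactly one URI
function readResource(uri: string) {
  const entry = resources.find((r) => r.uri === uri)
  if (!entry) throw new Error(`Resource not found: ${uri}`)
  return { contents: [{ uri: entry.uri, mimeType: entry.mimeType, text: entry.load() }] }
}
```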

Real-World Application

I use resources for internal documentation that shouldn't be permanently loaded into context but needs to be available when relevant. Examples:

- Troubleshooting guides: When Cursor encounters a "500 error" in our MCP client implementation, it can fetch the troubleshooting resource that explains common causes and fixes

- API specifications: Instead of cluttering the context with entire API docs, the model fetches only the relevant endpoint documentation when needed

- Coding standards: Team-specific patterns that apply to particular file types or frameworks

The resource system also supports subscriptions—your MCP server can notify the client when resource content changes, keeping the model's context fresh without manual reloads.
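In the MCP spec, subscriptions are a short loop: the server declares `resources: { subscribe: true }`, the client sends `resources/subscribe` with a URI, and when that content changes the server sends a `notifications/resources/updated` notification carrying the URI, after which the client re-reads it. A minimal sketch of the server-side bookkeeping, with the transport hidden behind a hypothetical `send` callback:

```typescript
// Sketch of subscription bookkeeping. `send` is a hypothetical stand-in for
// whatever delivers a JSON-RPC notification to the connected client.
interface JsonRpcNotification {
  jsonrpc: '2.0'
  method: string
  params: { uri: string }
}

class ResourceSubscriptions {
  private subscribed = new Set<string>()
  private send: (n: JsonRpcNotification) => void

  constructor(send: (n: JsonRpcNotification) => void) {
    this.send = send
  }

  // Handle resources/subscribe
  subscribe(uri: string) {
    this.subscribed.add(uri)
  }

  // Handle resources/unsubscribe
  unsubscribe(uri: string) {
    this.subscribed.delete(uri)
  }

  // Call whenever the underlying content changes (file watcher, webhook, ...)
  contentChanged(uri: string) {
    if (!this.subscribed.has(uri)) return // nobody is listening
    this.send({
      jsonrpc: '2.0',
      method: 'notifications/resources/updated',
      params: { uri },
    })
  }
}
```

The notification carries only the URI, not the new content, so the model's context is refreshed through a normal `resources/read` rather than pushed in unasked.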

Feature 3: Elicitation - Interactive User Input

Elicitation is the most underrated feature in this release. It lets MCP servers request additional information from users through structured UI forms during tool execution.

Why This Matters

Previously, if an MCP tool needed clarification, the model had to guess, make assumptions, or fail. Elicitation changes that dynamic entirely—the server can pause execution and ask the user directly.

The server sends a schema defining what inputs it needs:

```json
{
  "jsonrpc": "2.0",
  "id": 2,
  "method": "elicitation/create",
  "params": {
    "message": "Please provide your contact information",
    "requestedSchema": {
      "type": "object",
      "properties": {
        "name": { "type": "string", "description": "Your full name" },
        "email": { "type": "string", "format": "email", "description": "Your email address" },
        "age": { "type": "number", "minimum": 18, "description": "Your age" }
      },
      "required": ["name", "email"]
    }
  }
}
```

Cursor renders this as a native form. The user fills it out (or declines), and the MCP server receives structured data shaped by the schema instead of free-form text it has to parse.
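The answer comes back as the result of that request: an `action` of `accept`, `decline`, or `cancel` and, on accept, a `content` object. Even with a client-rendered form, the server should check that content before acting on it. A minimal sketch that validates a result against the flat schema shown above; it handles only the keywords that example uses (`type`, `required`, `minimum`, and a deliberately loose email check), so treat it as an illustration, not a JSON Schema validator.

```typescript
// Minimal check of an elicitation/create result against a flat requested
// schema. Covers only the keywords used in the contact-info example.
type FieldSchema =
  | { type: 'string'; format?: 'email'; description?: string }
  | { type: 'number'; minimum?: number; description?: string }
  | { type: 'boolean'; description?: string }

interface RequestedSchema {
  type: 'object'
  properties: Record<string, FieldSchema>
  required?: string[]
}

interface ElicitResult {
  action: 'accept' | 'decline' | 'cancel'
  content?: Record<string, unknown>
}

// Returns a list of problems; an empty list means the input is usable
function validateElicitation(schema: RequestedSchema, result: ElicitResult): string[] {
  if (result.action !== 'accept') return [`user did not accept (${result.action})`]
  const content = result.content ?? {}
  const errors: string[] = []
  for (const name of schema.required ?? []) {
    if (!(name in content)) errors.push(`missing ${name}`)
  }
  for (const [name, value] of Object.entries(content)) {
    const field = schema.properties[name]
    if (!field) {
      errors.push(`unexpected field ${name}`)
      continue
    }
    if (typeof value !== field.type) {
      errors.push(`${name} should be a ${field.type}`)
      continue
    }
    if (field.type === 'number' && field.minimum !== undefined && (value as number) < field.minimum) {
      errors.push(`${name} must be >= ${field.minimum}`)
    }
    // Loose shape check only: something@something, no whitespace
    if (field.type === 'string' && field.format === 'email' && !/^[^@\s]+@[^@\s]+$/.test(value as string)) {
      errors.push(`${name} is not an email address`)
    }
  }
  return errors
}
```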

Practical Applications

Confirmation before destructive actions: Before deleting a GitHub repository, an elicitation can ask the user to type the repo name as confirmation, exactly like GitHub's web UI. This prevents catastrophic mistakes from overeager AI execution.
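The type-the-name check above might look like this inside a tool handler. This is a minimal sketch: `elicit` is a hypothetical stand-in for the SDK call that sends `elicitation/create` and returns the user's result, `deleteRepo` stands in for the actual GitHub API call, and the `confirm_repo` field name is an illustrative choice.

```typescript
// Sketch: confirm a destructive action by asking the user to retype the repo name.
interface ElicitResult {
  action: 'accept' | 'decline' | 'cancel'
  content?: Record<string, unknown>
}

type ConfirmationRequest = ReturnType<typeof buildDeleteConfirmation>

function buildDeleteConfirmation(repo: string) {
  return {
    message: `This permanently deletes ${repo}. Type the repository name to confirm.`,
    requestedSchema: {
      type: 'object' as const,
      properties: {
        confirm_repo: { type: 'string' as const, description: `Type "${repo}" to confirm` },
      },
      required: ['confirm_repo'],
    },
  }
}

// Only an explicit accept with an exact match counts as confirmation
function isDeleteConfirmed(repo: string, result: ElicitResult): boolean {
  return result.action === 'accept' && result.content?.['confirm_repo'] === repo
}

// Tool handler: ask first, act only on a confirmed answer
async function deleteRepoTool(
  repo: string,
  elicit: (req: ConfirmationRequest) => Promise<ElicitResult>,
  deleteRepo: (repo: string) => Promise<void>,
): Promise<string> {
  const result = await elicit(buildDeleteConfirmation(repo))
  if (!isDeleteConfirmed(repo, result)) return `Deletion of ${repo} cancelled`
  await deleteRepo(repo)
  return `Deleted ${repo}`
}
```

Requiring an exact string match, rather than a yes/no boolean, forces the user to read which repository is about to go.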

Gathering missing parameters: When creating a calendar event, instead of letting the model guess the duration or attendees, elicitation can explicitly ask the user to specify these details.
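One way to do this is to ask only for what the model left out. A minimal sketch, assuming a hypothetical `EventDraft` shape: it builds a `requestedSchema` containing just the missing fields, using one field of each primitive type elicitation allows (string, number, boolean, and a string enum). Fields with a default are offered but not required.

```typescript
// Sketch: elicit only the calendar-event fields the model did not supply.
// Field names and limits are hypothetical.
type PrimitiveSchema =
  | { type: 'string'; description: string; format?: 'email' | 'date-time' }
  | { type: 'number'; description: string; minimum?: number; maximum?: number }
  | { type: 'boolean'; description: string; default?: boolean }
  | { type: 'string'; description: string; enum: string[] }

interface EventDraft {
  title?: string
  durationMinutes?: number
  attendees?: string
  sendInvites?: boolean
  visibility?: string
}

const eventFields: Record<keyof EventDraft, PrimitiveSchema> = {
  title: { type: 'string', description: 'Event title' },
  durationMinutes: { type: 'number', description: 'Duration in minutes', minimum: 5, maximum: 480 },
  attendees: { type: 'string', description: 'Comma-separated attendee emails' },
  sendInvites: { type: 'boolean', description: 'Email invites now?', default: true },
  visibility: { type: 'string', description: 'Who can see it', enum: ['public', 'private'] },
}

function buildEventElicitation(draft: EventDraft) {
  const properties: Record<string, PrimitiveSchema> = {}
  for (const key of Object.keys(eventFields) as (keyof EventDraft)[]) {
    if (draft[key] === undefined) properties[key] = eventFields[key]
  }
  return {
    message: 'A few details are missing for this event.',
    requestedSchema: {
      type: 'object' as const,
      properties,
      // Fields with a default can be left blank, so don't require them
      required: Object.keys(properties).filter((k) => !('default' in properties[k])),
    },
  }
}
```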

Multi-step workflows: Complex operations that require human judgment at decision points can now pause, gather input, and continue seamlessly.

Currently, Cursor supports four schema types for elicitation: string, number, boolean, and enum. This covers most use cases, though I expect we'll see more complex types (like file uploads or date pickers) in future implementations.

Security Implications

Elicitation is your safety net. Before any high-impact action—sending emails, making API calls that cost money, modifying production data—prompt for explicit confirmation. This is how you build MCP servers that enterprises can actually trust.
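Applying this consistently is easier with one gate in front of the tool dispatcher than with per-tool checks. A minimal sketch, assuming a hypothetical impact label per tool and a `confirm` callback that stands in for an elicitation round-trip. Unknown tools fail closed and require confirmation.

```typescript
// Sketch: a single confirmation gate for high-impact tools.
// Tool names and impact labels are hypothetical.
type Impact = 'read' | 'write' | 'sends_messages' | 'costs_money' | 'production'

const toolImpact: Record<string, Impact> = {
  search_docs: 'read',
  create_draft: 'write',
  send_email: 'sends_messages',
  run_paid_api: 'costs_money',
  update_prod_db: 'production',
}

const HIGH_IMPACT: Impact[] = ['sends_messages', 'costs_money', 'production']

// Unknown tools fail closed: treat them as high impact
function needsConfirmation(tool: string): boolean {
  const impact: Impact | undefined = toolImpact[tool]
  return impact === undefined || HIGH_IMPACT.includes(impact)
}

// `confirm` stands in for an elicitation/create round-trip; resolves true on accept
async function guardedCall<T>(
  tool: string,
  run: () => Promise<T>,
  confirm: (message: string) => Promise<boolean>,
): Promise<T | { cancelled: true }> {
  if (needsConfirmation(tool) && !(await confirm(`Allow "${tool}" to run?`))) {
    return { cancelled: true }
  }
  return run()
}
```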

Feature 4: Dynamic Tools - Solving Context Window Limits

Here's a problem every Cursor power user hits: tool limit warnings. Clients cap how many tools they pass to the model, and models pick tools less reliably as the list grows, so in practice the ceiling sits somewhere around 30-80 tools. If your MCP server exposes 1,000+ tools (entirely possible when connecting to systems like Linear, Jira, Figma, and internal APIs), you run into performance degradation or outright failures.

Dynamic tools solve this with a clever workaround.

The Pattern

- Your MCP server exposes a limited set of "always-available" tools (say, 30)

- One of these tools is `add_tools`, which accepts tool categories or names as parameters

- When the model calls `add_tools("figma", "github")`, the server sends a `notifications/tools/list_changed` notification

- The MCP client fetches the updated tool list, which now includes Figma and GitHub tools

- The oldest tools (based on last-used timestamp) get evicted from the active set to stay under the limit

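```typescript
// Simplified dynamic tool implementation.
// Assumes `server` is your MCP SDK Server instance and `getToolsForCategory`
// is your own category -> tool-name lookup (both declared as placeholders).
declare const server: { sendToolListChanged(): Promise<void> }
declare function getToolsForCategory(category: string): string[]

const MAX_ACTIVE_TOOLS = 30
const pinnedTools = new Set(['add_tools']) // never evicted, or the model can't load more
const activeTools = new Set(['add_tools', 'search', 'calendar_list'])
const toolUsage = new Map<string, number>() // tool name -> last-used timestamp

// Oldest unpinned active tool; tools never used count as oldest
function getLeastRecentlyUsed(): string | undefined {
  let oldest: string | undefined
  for (const tool of activeTools) {
    if (pinnedTools.has(tool)) continue
    if (oldest === undefined || (toolUsage.get(tool) ?? 0) < (toolUsage.get(oldest) ?? 0)) {
      oldest = tool
    }
  }
  return oldest
}

async function addTools(categories: string[]) {
  for (const category of categories) {
    for (const tool of getToolsForCategory(category)) {
      activeTools.add(tool)
      toolUsage.set(tool, Date.now()) // also update this in your tools/call handler
    }
  }
  // Evict least recently used tools until back under the limit
  while (activeTools.size > MAX_ACTIVE_TOOLS) {
    const lru = getLeastRecentlyUsed()
    if (lru === undefined) break // only pinned tools left
    activeTools.delete(lru)
    toolUsage.delete(lru) // keep the usage map in sync
  }
  // Sends notifications/tools/list_changed so the client re-fetches tools/list
  await server.sendToolListChanged()
}
```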
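On the other side of that notification, the client simply calls `tools/list` again, so the server's list handler only has to filter its full catalog down to the active set. For clients to act on the notification at all, the server must declare the `tools: { listChanged: true }` capability. A minimal sketch with a hypothetical three-tool catalog; `recordToolCall` is an illustrative helper you would call from the `tools/call` handler so that usage timestamps reflect real calls, not just loads.

```typescript
// Sketch: tools/list advertises only active tools; tools/call refreshes usage.
// Catalog contents are hypothetical.
interface ToolDef {
  name: string
  description: string
  inputSchema: { type: 'object'; properties?: Record<string, unknown> }
}

const catalog = new Map<string, ToolDef>([
  ['add_tools', {
    name: 'add_tools',
    description: 'Load more tools by category',
    inputSchema: { type: 'object', properties: { categories: { type: 'array', items: { type: 'string' } } } },
  }],
  ['figma_get_file', { name: 'figma_get_file', description: 'Fetch a Figma file', inputSchema: { type: 'object' } }],
  ['github_create_pr', { name: 'github_create_pr', description: 'Open a pull request', inputSchema: { type: 'object' } }],
])

const activeTools = new Set(['add_tools', 'figma_get_file'])
const toolUsage = new Map<string, number>()

// tools/list result: the full catalog never reaches the model, only the active set
function listTools() {
  const tools: ToolDef[] = []
  for (const name of activeTools) {
    const def = catalog.get(name)
    if (def) tools.push(def)
  }
  return { tools }
}

// Call from the tools/call handler before dispatching
function recordToolCall(name: string, now: number = Date.now()) {
  if (!activeTools.has(name)) throw new Error(`Tool not loaded: ${name}; call add_tools first`)
  toolUsage.set(name, now) // recently used tools stay clear of eviction
}
```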

Why This Works

The model intelligently decides which tools it needs based on the task at hand. Working on a pull request? It loads GitHub tools. Designing a component? It loads Figma and design system tools. You get access to your entire toolkit without overwhelming the context window.

At Webrix, we use this pattern to expose 100+ internal tools through a single MCP connection. The model starts with high-level tools like search_company_tools, then dynamically loads the specific integrations it determines are relevant.

Implementation Notes

When implementing dynamic tools, consider these patterns:

- Category-based loading: Group related tools (e.g., "database", "monitoring", "deployment")

- Semantic search: Let the model describe what it needs, then load matching tools

- Usage-based eviction: Keep frequently-used tools in the active set longer

- Explicit user control: Allow users to "pin" certain tools that should always be available
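Several of these patterns fit in one small catalog module. A minimal sketch, assuming a hypothetical tool catalog: category lookup, a keyword-overlap search used here as a simple stand-in for real semantic search (embeddings would replace the scoring function), and a pinned set that eviction must skip.

```typescript
// Sketch of a tool catalog with category loading, keyword search
// (a stand-in for semantic search), and pinned tools. All names are hypothetical.
interface CatalogTool {
  name: string
  category: string
  description: string
}

const catalog: CatalogTool[] = [
  { name: 'db_query', category: 'database', description: 'Run a read-only SQL query' },
  { name: 'db_migrate', category: 'database', description: 'Apply pending schema migrations' },
  { name: 'grafana_panel', category: 'monitoring', description: 'Fetch a dashboard panel' },
  { name: 'deploy_service', category: 'deployment', description: 'Deploy a service to staging' },
]

const pinned = new Set(['add_tools', 'search_tools']) // never evicted

// Category-based loading: every tool in the named categories
function toolsForCategories(categories: string[]): string[] {
  return catalog.filter((t) => categories.includes(t.category)).map((t) => t.name)
}

// Keyword search: rank tools by how many query words their name or description contains
function searchTools(query: string, limit = 3): string[] {
  const words = query.toLowerCase().split(/\s+/).filter(Boolean)
  return catalog
    .map((t) => ({
      name: t.name,
      score: words.filter((w) => `${t.name} ${t.description}`.toLowerCase().includes(w)).length,
    }))
    .filter((r) => r.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((r) => r.name)
}

// Eviction candidates exclude pinned tools
function evictable(active: string[]): string[] {
  return active.filter((name) => !pinned.has(name))
}
```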

Building Better MCP Servers

These four features shift MCP from "interesting protocol" to "essential infrastructure." If you're developing MCP servers, here's my advice:

Start with prompts. They provide immediate value and don't require complex implementation. Identify your team's top 5-10 repetitive workflows and encode them as prompts.

Add resources strategically. Don't dump everything into resources—be selective. Focus on documentation that's frequently needed but too large to keep in permanent context.

Use elicitation for safety. Any tool that can cause damage, cost money, or affect other people should confirm intent through elicitation before executing.

Plan for dynamic tools early. If your server will eventually expose more than 50 tools, implement dynamic loading from the start. Retrofitting it later is painful.

What's Next

The MCP spec continues to evolve rapidly. Features currently in discussion include:

- Streaming resources: For large files or real-time data that updates continuously

- Richer elicitation types: File uploads, multi-select, conditional fields

- Cross-server composition: Allowing one MCP server to invoke tools from another

- Memory primitives: Persistent state across sessions

If you're serious about AI-assisted development, now is the time to invest in understanding MCP deeply. The protocol is becoming infrastructure—similar to how HTTP is infrastructure for web apps.

Try It Yourself

Want to experience these features? Here's how to get started:

- Update Cursor to the latest version (these are recent additions; elicitation only entered the MCP spec in its 2025-06-18 revision, so older builds won't support it)

- Install an MCP server that implements these features. The official MCP servers repository has examples

- Or use Webrix MCP Gateway for enterprise-grade security and access to 100+ pre-built integrations

The shift from basic tool calling to contextually-aware, interactive, dynamically-loaded capabilities is substantial. These aren't incremental improvements—they're architectural changes in how AI assistants access and use information.

If you're building MCP servers: implement these features. They're not optional anymore; they're what users expect.

If you're using Cursor: learn to leverage them effectively. The developers who master prompt invocation, understand when to request resources, and design workflows around elicitation will ship faster and with higher quality.

The future of AI-assisted development isn't just about smarter models—it's about smarter protocols for connecting those models to the systems we actually use.

Want to see this in action? The Webrix MCP Gateway implements all four features with enterprise-grade security. Try it free and connect your entire toolchain through a single secure gateway.