Last month at the MCP Dev Summit in London, I had the opportunity to share some hard lessons we've learned at Webrix about tool management in enterprise AI systems. The talk focused on a problem that seems counterintuitive at first: giving your AI agent access to more tools can actually make it perform worse.

The Cost of Context Overload

Here's what we discovered: when you connect an AI agent to dozens (or hundreds) of enterprise tools—GitHub, Slack, Jira, Figma, Linear, and so on—you don't get a "Super Agent." You get chaos.

The costs are real and measurable:

- Token burn: Every tool description consumes context window space before the agent even takes an action. With 200 tools, you might burn thousands of tokens just loading tool metadata.

- Attention loss: LLMs suffer from "attention degradation" when presented with too many options. They make wrong assumptions or choose familiar-sounding tools that aren't optimal for the task.

- Expensive mistakes: We've seen agents accidentally Slack entire companies with sensitive data, or make API calls that cost real money—all because they had too many tools and not enough clarity.
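To put a rough number on the token-burn point, here is a back-of-the-envelope sketch. The four-characters-per-token ratio is a common rule of thumb, not a real tokenizer, and the tool definition is a made-up example shaped like an MCP tool entry, so treat the result as illustrative only.

```python
import json

# Rule-of-thumb ratio (an assumption, not a real tokenizer).
CHARS_PER_TOKEN = 4


def estimate_tokens(tool: dict) -> int:
    """Roughly estimate the tokens a serialized tool definition occupies."""
    return len(json.dumps(tool)) // CHARS_PER_TOKEN


def estimate_catalog_tokens(tools: list[dict]) -> int:
    """Sum the estimate over every tool loaded into the context window."""
    return sum(estimate_tokens(tool) for tool in tools)


# Hypothetical tool definition: name, description, JSON Schema for the input.
example_tool = {
    "name": "slack_post_message",
    "description": "Post a message to a Slack channel on behalf of the user.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "channel": {"type": "string", "description": "Channel ID or name"},
            "text": {"type": "string", "description": "Message body"},
        },
        "required": ["channel", "text"],
    },
}

per_tool = estimate_tokens(example_tool)
catalog = estimate_catalog_tokens([example_tool] * 200)
print(f"~{per_tool} tokens per tool, ~{catalog} tokens for 200 tools")
```

Real tool schemas are often longer than this one, so the estimate is more likely a floor than a ceiling.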

Why Common Solutions Fall Short

I walked through four approaches to managing tool overload, showing why the first three don't scale:

1. Disable Tools (Too Restrictive)

The simplest solution: just turn off the tools you don't need. But this fails when different roles need different tool combinations. A Product Manager, for example, needs "everything" (design tools, project management, communication, analytics), which leaves almost nothing to disable.

2. Static Toolkits (One Size Doesn't Fit All)

Creating pre-defined toolkits per role (e.g., "PM Toolkit," "Engineer Toolkit") sounds good in theory. But real work doesn't fit into neat boxes. The moment someone needs a tool outside their kit, the whole system breaks down.
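A minimal sketch of why a static mapping is brittle. The role names and tool names here are hypothetical and only illustrate the failure mode described above.

```python
# Hypothetical role-to-toolkit mapping; every name is illustrative.
STATIC_TOOLKITS = {
    "pm": {"jira_create_issue", "slack_post_message", "figma_get_file"},
    "engineer": {"github_create_pr", "jira_create_issue", "slack_post_message"},
}


def resolve_tool(role: str, tool_name: str) -> str:
    """Return the tool name if the role's static kit contains it, else fail."""
    kit = STATIC_TOOLKITS.get(role, set())
    if tool_name not in kit:
        raise LookupError(f"{tool_name!r} is not in the {role!r} toolkit")
    return tool_name


print(resolve_tool("pm", "figma_get_file"))  # inside the kit, so it resolves

try:
    # A PM checking on a release needs a tool that lives in the engineer kit.
    resolve_tool("pm", "github_create_pr")
except LookupError as err:
    print(f"blocked: {err}")
```

Every exception like this one forces someone to edit the kit by hand, which is the maintenance burden that makes the approach hard to scale.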

3. Search and Call (Deeply Flawed)

This is the most common enterprise pattern: add a "search_available_tools" function that the agent calls to find what it needs. The problems:

- The LLM often doesn't realize it needs to search first

- It wastes tokens on unnecessary search calls

- Search results become just another context bloat problem
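The pattern can be sketched as follows. Only the function name search_available_tools comes from the talk; the catalog, the naive keyword matching, and the limit parameter are assumptions made for illustration.

```python
import json

# Hypothetical catalog; a real deployment would hold hundreds of entries.
CATALOG = [
    {"name": "slack_post_message", "description": "Post a message to a Slack channel."},
    {"name": "gmail_send_email", "description": "Send an email message through Gmail."},
    {"name": "jira_create_issue", "description": "Create a Jira issue in a project."},
    {"name": "github_create_pr", "description": "Open a pull request on GitHub."},
]


def search_available_tools(query: str, limit: int = 10) -> list[dict]:
    """Naive keyword search over tool names and descriptions."""
    words = query.lower().split()
    hits = [
        tool
        for tool in CATALOG
        if any(w in tool["name"].lower() or w in tool["description"].lower() for w in words)
    ]
    return hits[:limit]


# Round trip 1: the model has to decide to search before doing anything else.
results = search_available_tools("send message")
print([tool["name"] for tool in results])

# The results are themselves injected into the context window.
print(len(json.dumps(results)), "characters of search results added to context")

# Round trip 2 (not shown): the model picks one result and calls the real tool.
```

Even in this toy version, the model pays for an extra call and still has to choose between multiple plausible matches.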

The Dynamic MCP Solution

The breakthrough came from leveraging a lesser-known MCP feature: tools/list_changed notifications (sent on the wire as notifications/tools/list_changed).

Here's how Dynamic MCP (DMCP) works:

- The agent starts with a minimal set of core tools (~20-30)

- Based on the user's current session, task, or context, the MCP server intelligently selects which additional tools to expose

- The server sends a tools/list_changed notification

- The client automatically fetches the updated, contextually-relevant tool list

- Old, unused tools get evicted using LRU (Least Recently Used) logic

The key insight: The agent only sees the tools it actually needs for the current task, without having to decide what to load. The decision happens at the infrastructure layer, not at the model layer.
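The flow above can be sketched in plain Python without any MCP SDK. This is an illustrative sketch, not Webrix's implementation: the DynamicToolRegistry class, its method names, and the tool names are all hypothetical, and the logic that decides which tools fit the current context is left out. The notification method name is the one defined in the MCP specification, and a real server would also need to declare the tools listChanged capability.

```python
from collections import OrderedDict
from typing import Callable

# JSON-RPC notification defined by the MCP specification (it carries no id).
LIST_CHANGED = {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"}


class DynamicToolRegistry:
    """Core tools stay pinned; contextual tools live in an LRU-bounded set."""

    def __init__(self, core: list[str], max_dynamic: int,
                 notify: Callable[[dict], None]) -> None:
        self.core = list(core)
        self.max_dynamic = max_dynamic
        self.notify = notify  # hypothetical transport hook
        self.dynamic = OrderedDict()  # tool name -> None, oldest first

    def expose(self, names: list[str]) -> None:
        """Expose tools chosen for the current context, evicting LRU ones."""
        before = set(self.dynamic)
        for name in names:
            if name in self.core:
                continue  # core tools are always listed
            self.dynamic[name] = None
            self.dynamic.move_to_end(name)  # mark as most recently used
        while len(self.dynamic) > self.max_dynamic:
            self.dynamic.popitem(last=False)  # drop the least recently used
        if set(self.dynamic) != before:
            self.notify(LIST_CHANGED)  # client should re-request tools/list

    def touch(self, name: str) -> None:
        """Record a tool call so the tool counts as recently used."""
        if name in self.dynamic:
            self.dynamic.move_to_end(name)

    def list_tools(self) -> list[str]:
        """What a tools/list request would return right now."""
        return self.core + list(self.dynamic)


sent = []
registry = DynamicToolRegistry(core=["search_docs"], max_dynamic=2, notify=sent.append)
registry.expose(["jira_create_issue", "slack_post_message"])
registry.touch("jira_create_issue")    # jira is now the most recently used
registry.expose(["github_create_pr"])  # over capacity: slack_post_message is evicted
print(registry.list_tools())
print(len(sent), "list_changed notifications sent")
```

Note that the model never calls expose itself. In this sketch the server-side code does, which mirrors the point that the decision lives in the infrastructure layer.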

This is ideal when you need access to hundreds of tools but only use a small subset repeatedly. It's how we manage 100+ integrations at Webrix without overwhelming the context window.

The Future: Agent-to-Agent Workflows (A2A)

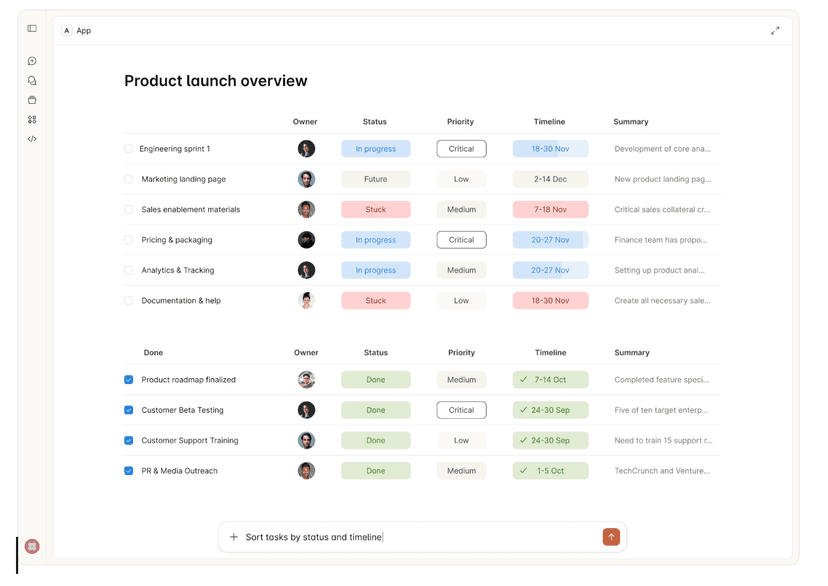

I ended the talk with what I believe is the logical conclusion of this approach: moving from monolithic "Super Agents" to specialized agent workforces.

Instead of building one agent that does everything poorly, imagine:

- A Notify Agent that handles all communication (whether it's Gmail, Slack, or SMS)

- A Research Agent specialized in gathering and synthesizing information

- A Code Agent focused purely on development tasks

- A PM Agent that coordinates the others

Each agent maintains a focused set of tools. They collaborate through standardized interfaces. The result: more efficient, more accurate, and more cost-effective than trying to build a single agent with access to everything.
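As a rough sketch of that division of labor, the snippet below routes tasks to specialists that each carry a small tool set. It is plain Python rather than any particular agent-to-agent protocol, and the Agent and Coordinator classes, the task kinds, and the tool names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    tools: set[str]

    def handle(self, task: str) -> str:
        # Placeholder for a real model call restricted to self.tools.
        return f"{self.name} handled {task!r} with {len(self.tools)} tools in context"


@dataclass
class Coordinator:
    """Stands in for the PM Agent: routes each task to one specialist."""

    routes: dict[str, Agent] = field(default_factory=dict)

    def register(self, task_kind: str, agent: Agent) -> None:
        self.routes[task_kind] = agent

    def dispatch(self, task_kind: str, task: str) -> str:
        agent = self.routes.get(task_kind)
        if agent is None:
            raise KeyError(f"no agent registered for {task_kind!r}")
        return agent.handle(task)


pm = Coordinator()
pm.register("notify", Agent("Notify Agent", {"gmail_send_email", "slack_post_message", "sms_send"}))
pm.register("research", Agent("Research Agent", {"web_search", "summarize_document"}))
pm.register("code", Agent("Code Agent", {"github_create_pr", "run_tests"}))

print(pm.dispatch("notify", "tell the team the release is live"))
```

No single agent in this sketch ever sees more than three tools, even though the system as a whole covers seven.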

This is the A2A (Agent-to-Agent) future we're building toward at Webrix.

Watch the Full Talk

The complete presentation dives deeper into implementation patterns, benchmarks, and architectural trade-offs. If you're building enterprise AI systems or struggling with tool management in your agents, it is worth watching.

Key Takeaways

If you're implementing MCP in production:

- Don't assume more tools = better results. Context window management is critical.

- Avoid search-based tool discovery. It shifts the burden to the LLM and rarely works well.

- Leverage dynamic tool loading using the tools/list_changed notification pattern.

- Think in terms of specialized agents, not monolithic super-agents.

The shift from static to dynamic tool management isn't just an optimization—it's a fundamental architectural change in how we build reliable AI systems.

Building enterprise AI with MCP? Try Webrix to see Dynamic MCP in action with 100+ pre-built integrations and enterprise-grade security.